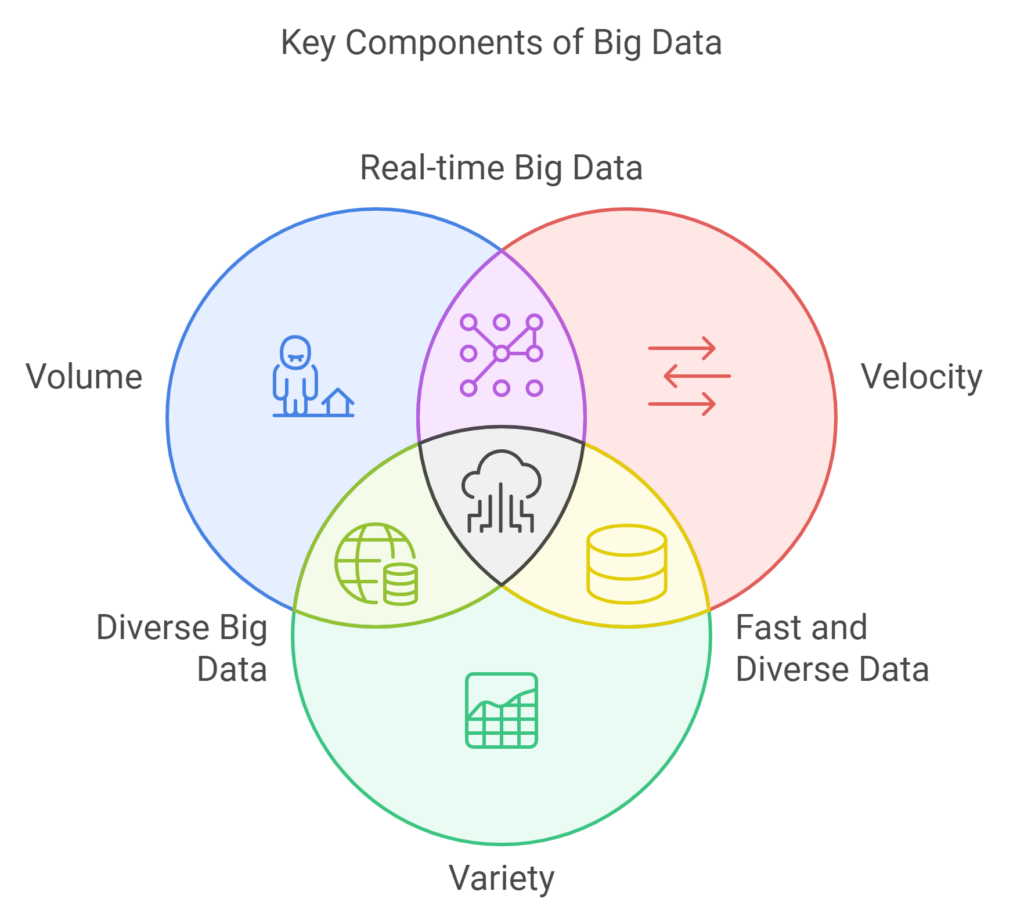

A new way of using data has come to the forefront: “Big Data.” It rests on three key elements: volume, velocity, and variety. Understanding these 3Vs helps businesses use data wisely and stay competitive.

The amount of data businesses collect is growing fast, which makes it harder to manage and harder to mine for useful information. Companies handle everything from sales figures to social media posts, and they need to store and interpret all of it.

The speed at which data comes in is also important. Businesses must quickly process and act on this data. This is crucial for keeping up with market changes and customer needs.

Lastly, the variety of data types and sources is a defining part of big data. Companies receive data from many places, such as databases and social media, and need to combine and analyze it to find important insights.

Key Takeaways

- Big Data is defined by three essential characteristics: volume, velocity, and variety.

- The growing volume of data generated and collected by businesses presents challenges in managing and extracting insights.

- The speed at which data is generated and the need for real-time processing and decision-making are critical factors in the world of Big Data.

- The variety of data formats and sources that organizations must contend with is a defining characteristic of Big Data.

- Understanding the 3Vs of Big Data is crucial for organizations seeking to harness the power of this valuable resource and stay ahead of the competition.

What is Big Data?

In today’s digital world, the amount of data created is huge. This vast amount of information is called “big data.” It’s important to understand what big data is and why it matters today.

Defining the Concept of Big Data

Big data is commonly described in terms of the 3Vs: volume, velocity, and variety. The volume of data keeps growing, by some estimates doubling roughly every two years worldwide. Velocity is rising too, with real-time analysis becoming a key requirement. The variety of data sources is broad, ranging from social media to sensor readings.

The Importance of Big Data in Today’s World

The rise of big data has changed how businesses and organizations work. It helps them make better decisions and improve their operations. Big data is used in many fields, like healthcare, finance, and retail, for things like personalized marketing and predictive analytics.

As data volume, velocity, and variety keep growing, managing big data well is crucial. Companies need to find ways to capture, store, and analyze this data. This way, they can use it to their advantage and stay ahead in the data-driven world.

The 3Vs of Big Data

When we talk about big data, three key characteristics, known as the 3Vs, come into play: volume, velocity, and variety. These elements shape the challenges and opportunities associated with managing and leveraging big data effectively.

Volume refers to the sheer amount of data being generated and collected, often in the range of terabytes or even petabytes. The rapid growth in data volume is driven by the increasing number of connected devices, social media platforms, and various other sources that continuously produce vast amounts of information.

Velocity describes the speed at which data is being created, processed, and analyzed. The accelerating pace of data generation and the need for real-time or near-real-time insights pose significant challenges for organizations in terms of data management and decision-making.

Variety encompasses the diverse types of data that are now available, including structured data (e.g., databases, spreadsheets), unstructured data (e.g., text, images, videos), and semi-structured data (e.g., web logs, XML files). This data heterogeneity requires advanced techniques and technologies to effectively manage and extract value from the vast arrays of information.

Understanding and addressing the 3Vs (volume, velocity, and variety) is crucial for organizations seeking to harness the power of big data and stay competitive in today’s data-driven landscape.

Volume: Dealing with High Data Volume

In the world of big data, the amount of information is huge. The Internet of Things, social media, and digital changes have caused a big data boom. Businesses face a daily battle to manage this vast amount of data.

Understanding Data Volume in Big Data

Volume is the most visible characteristic of big data: there is more of it than traditional systems were designed to store and process. That scale, combined with the complexity of the data itself, is what makes big data so hard to manage.

Here are some numbers to show how big big data is:

- Forecasts put the global volume of data at about 181 zettabytes by 2025, up from 33 zettabytes in 2018.

- By one widely cited estimate, 2.5 quintillion bytes of data are created every day, and 90 percent of all data was created in the preceding two years.

- By 2025, each connected person is projected to interact with devices nearly 4,800 times a day, which will make data volume and scalability even bigger challenges.

To stay ahead, companies must learn to handle and use this high data volume. They need to process, store, and analyze it all. This is essential for any big data strategy to succeed.
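To put the figures above in perspective, the short Python sketch below works out the growth rate they imply. It assumes decimal (SI) units and a constant growth rate between the two endpoints, which is a simplification; the variable names are illustrative only.

```python
import math

ZB = 10**21  # one zettabyte in bytes (decimal SI prefix)
EB = 10**18  # one exabyte in bytes

# Figures quoted in the list above.
start_zb, start_year = 33, 2018
end_zb, end_year = 181, 2025
daily_bytes = 2.5 * 10**18  # 2.5 quintillion bytes per day

years = end_year - start_year
# Compound annual growth rate implied by the two endpoints.
cagr = (end_zb / start_zb) ** (1 / years) - 1
# Time needed to double at that constant rate.
doubling_years = math.log(2) / math.log(1 + cagr)
# Daily volume in exabytes, and the yearly total in zettabytes.
daily_eb = daily_bytes / EB
yearly_zb = daily_bytes * 365 / ZB

print(f"Implied annual growth: {cagr:.1%}")
print(f"Implied doubling time: {doubling_years:.1f} years")
print(f"Daily volume: {daily_eb:.1f} EB, about {yearly_zb:.2f} ZB per year")
```

Under these assumptions the endpoints imply growth of roughly 27 to 28 percent a year, and the daily figure adds up to a little under one zettabyte a year. The quoted statistics come from different estimates made at different times, so they should be read as orders of magnitude rather than as a consistent data set.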

Velocity: Fast Data Processing

Data velocity is how fast data is generated, processed, and analyzed. As data arrives faster, fast and often real-time processing becomes essential. Businesses that handle this well can react sooner and make better-informed choices.

The main challenge with data velocity is the fast pace of data creation. From social media to sensor data, the amount is huge. To handle this, companies need the right tools to process and analyze data quickly.

Good big data management needs a mix of advanced analytics, machine learning, and scalable data processing. These technologies help businesses find valuable insights and make decisions that lead to growth and innovation.

For example, monitoring stock prices, detecting fraud, and optimizing supply chains all depend on processing data as it arrives. As data volumes grow, the need for fast, real-time processing will grow with them.
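As a rough illustration of processing events as they arrive, the sketch below flags a card that produces too many transactions inside a moving time window, which is one simple way to approach the fraud example. The class name, the sample stream, the 60-second window, and the threshold are all hypothetical, and the code assumes timestamps arrive in order.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events per key inside a moving time window."""

    def __init__(self, window_seconds, threshold):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.events = {}  # key -> deque of event timestamps

    def observe(self, key, timestamp):
        """Record one event; return True if the key exceeds the threshold."""
        window = self.events.setdefault(key, deque())
        window.append(timestamp)
        # Evict timestamps that have fallen out of the window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.threshold


# Hypothetical card transactions as (card_id, seconds since start).
stream = [("card-A", 0), ("card-B", 5), ("card-A", 10),
          ("card-A", 20), ("card-A", 25), ("card-B", 90)]

detector = SlidingWindowCounter(window_seconds=60, threshold=3)
alerts = [(card, ts) for card, ts in stream if detector.observe(card, ts)]
print(alerts)  # [('card-A', 25)]
```

Each event is handled once and old timestamps are discarded as the window moves, so memory stays bounded by the number of recent events rather than by the total length of the stream. Production systems typically delegate this kind of windowing to a stream-processing framework.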

Variety: Structured and Unstructured Data

The third V of big data, variety, concerns the different types of data involved. These include structured data, such as spreadsheets and databases, and unstructured data, such as social media posts, images, and videos. This variety can be both a challenge and an opportunity.

Managing Data Complexity in Big Data

Handling this complexity takes advanced techniques and tools. Companies must be able to draw insights from both structured and unstructured data, which helps them understand their business and customers better. Sound data management strategies are key to unlocking the full potential of this variety.
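One common way to cope with mixed data types is to convert each source into a shared record shape before analysis. The sketch below does this for a structured CSV export, semi-structured JSON lines, and an unstructured text post. The field names (`customer`, `note`, `user`, `message`) and the sample data are assumptions made up for the example.

```python
import csv
import io
import json
import re


def from_csv(text):
    """Structured: fixed columns, parsed with the csv module."""
    return [
        {"source": "csv", "customer": row["customer"], "text": row["note"]}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json_lines(text):
    """Semi-structured: JSON objects whose fields may be missing."""
    records = []
    for line in text.splitlines():
        if not line.strip():
            continue
        obj = json.loads(line)
        records.append({
            "source": "json",
            "customer": obj.get("user", "unknown"),
            "text": obj.get("message", ""),
        })
    return records


def from_free_text(text):
    """Unstructured: pull a customer handle out with a regular expression."""
    match = re.search(r"@(\w+)", text)
    customer = match.group(1) if match else "unknown"
    return [{"source": "text", "customer": customer, "text": text}]


csv_data = "customer,note\nalice,Order delayed\n"
json_data = ('{"user": "bob", "message": "Great service"}\n'
             '{"message": "No user field"}\n')
post = "Thanks @carol for the quick reply!"

records = from_csv(csv_data) + from_json_lines(json_data) + from_free_text(post)
for record in records:
    print(record)
```

Once every source yields the same keys, downstream analysis no longer needs to know where a record came from. Real unstructured data usually needs far more than a regular expression, so the last function is only a placeholder for whatever text or image processing applies.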

Data Sources in Big Data

Big data comes from many digital and analog sources. Some examples are:

- Social media platforms

- Internet of Things (IoT) devices

- Surveillance cameras

- Transactional systems

- Sensor data

- Customer interactions

It’s important to manage big data from these different sources well. This is key for companies to find valuable insights and make smart decisions.

The 3Vs of Big Data: A Recap

In the world of big data, the 3Vs—volume, velocity, and variety—are key. They shape its complexity and opportunities. Understanding these elements is crucial for managing and getting insights from big data.

The volume of big data is huge, often measured in terabytes or petabytes, and handling it requires scalable storage and substantial computing power. Velocity is about how fast data is generated and shared, often in real time. This speed calls for quick data processing and quick decisions.

The variety of big data is complex. It includes many data types, like structured, unstructured, and semi-structured. Handling this variety needs special tools and a deep understanding of the data.

By tackling the 3Vs, organizations can use big data fully. They can turn raw data into insights that drive innovation and improve decisions. Managing the 3Vs well is key to using big data’s power and staying ahead in the digital world.

Big Data Analytics Tools

As data grows in volume, speed, and variety, companies use advanced big data analytics tools. These tools help find valuable insights and make better decisions. They handle data processing, storage, and advanced analytics.

Popular Tools for Big Data Analysis

Some top big data analytics tools include:

- Apache Hadoop: An open-source framework for distributed storage and processing of large data sets across clusters of computers.

- Apache Spark: A fast and general-purpose cluster computing system well-suited for big data analytics and machine learning tasks.

- Amazon Web Services (AWS) Big Data Services: A comprehensive suite of cloud-based big data analytics tools, including Amazon Athena, Amazon EMR, and Amazon Redshift.

- Microsoft Azure Synapse Analytics: A unified big data analytics service that brings together enterprise data warehousing and big data analytics.

- Google Cloud Dataflow: A fully-managed batch and streaming data processing service for building and running highly scalable data processing pipelines.

These tools help manage, analyze, and find insights in big data. They help companies understand customers, improve operations, and innovate.
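To give a feel for how frameworks such as Hadoop split work across a cluster, the sketch below imitates the MapReduce programming model (map, shuffle, reduce) with a word count in plain Python. It runs on a single machine and does not use the Hadoop or Spark APIs; the function names and sample documents are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


documents = ["big data is big", "data moves fast", "fast data"]

# Each document could be mapped on a different machine; here it is sequential.
mapped = chain.from_iterable(map_phase(doc) for doc in documents)
counts = reduce_phase(shuffle_phase(mapped))
print(counts)  # {'big': 2, 'data': 3, 'is': 1, 'moves': 1, 'fast': 2}
```

The map step needs no knowledge of other documents, and the reduce step needs no knowledge of other keys, which is what lets a real cluster run both steps in parallel across many nodes.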

“Big data analytics tools are essential for organizations looking to stay competitive in today’s data-driven landscape.”

Using big data analytics tools, businesses can understand their operations, customer behavior, and market trends better. This leads to better decision-making and improved results.

Challenges of Managing Big Data

As data grows in volume, speed, and variety, managing it becomes tough. Organizations struggle with scalability and performance. Big data’s size and complexity can overwhelm traditional systems, causing slowdowns and failures.

Scalability and Performance Issues

Scaling big data solutions is a major challenge. Companies need to make sure their systems can absorb more data and process it faster as demand grows. Slow data processing delays decision-making and limits business value.

To tackle these issues, specialized big data technologies are needed. This includes distributed computing, in-memory databases, and cloud solutions. The right tools and infrastructure can help overcome traditional system limits and unlock big data’s full potential.
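Distributed systems usually scale by splitting records across machines so that each one handles only a share of the load. The sketch below shows one simple approach, hash partitioning, under the assumption of four shards and made-up customer records. The helper names `shard_for` and `partition` are hypothetical.

```python
import hashlib
from collections import defaultdict


def shard_for(key, num_shards):
    """Map a key to a shard index with a stable hash.

    hashlib is used instead of the built-in hash() because the latter is
    randomized per process for strings, which would break routing.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(records, num_shards):
    """Group (key, value) records by the shard their key hashes to."""
    shards = defaultdict(list)
    for key, value in records:
        shards[shard_for(key, num_shards)].append((key, value))
    return shards


# Hypothetical (customer_id, order_total) records.
records = [(f"customer-{i}", i * 10) for i in range(1000)]
shards = partition(records, num_shards=4)

for index in sorted(shards):
    print(f"shard {index}: {len(shards[index])} records")
```

Because the same key always lands on the same shard, all records for one customer can be processed together without coordination between machines. A drawback of the modulo step is that changing the number of shards reassigns most keys, which is why some systems use consistent hashing instead.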

FAQ

What are the three key characteristics that define big data?

Big data is defined by three main characteristics, known as the 3Vs. These are volume, velocity, and variety. These elements highlight the challenges and opportunities in managing and analyzing large, complex data sets.

Why is the volume of data important in the context of big data?

Volume is what pushes data beyond what traditional systems can store and process. Understanding its scale is crucial for choosing storage and processing approaches that can manage and analyze big data effectively.

How does the velocity of data impact big data management?

The velocity of big data refers to how fast data is generated, processed, and analyzed. Handling the rapid pace of data creation and movement is a significant challenge. It requires fast data processing capabilities.

What is the role of data variety in big data?

Data variety in big data includes the diverse range of data types, from structured to unstructured. Handling the complexity of data variety is a key challenge. It requires specialized tools and techniques.

What are some popular tools for big data analysis?

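Popular tools for big data analysis include Apache Hadoop and Apache Spark, along with cloud services such as Amazon EMR, Azure Synapse Analytics, and Google Cloud Dataflow. Visualization tools such as Tableau and Power BI are often used alongside them. Together, these tools cover data processing, visualization, and insight extraction from large, complex data sets.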

What are the main challenges in managing big data?

The main challenges in managing big data include scalability and performance issues. There’s also the overall complexity of handling the volume, velocity, and variety of data. Addressing these challenges requires innovative solutions and strategies for effective data management and analysis.